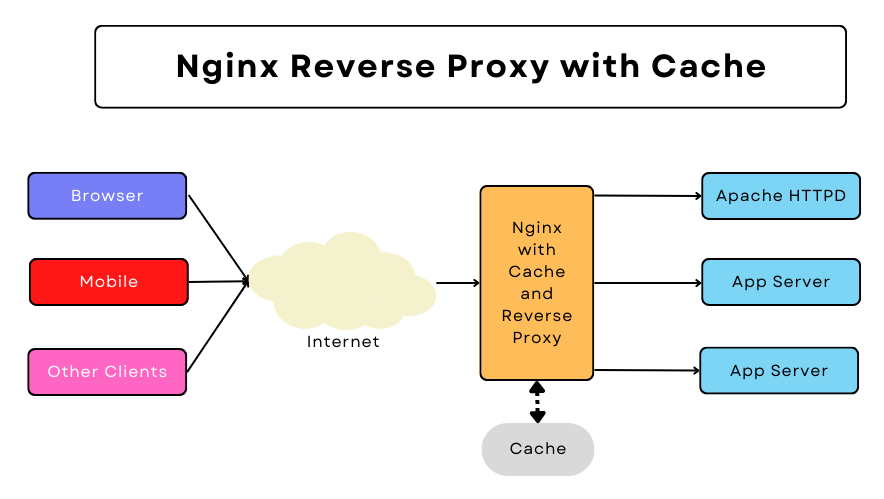

In this guide, I will discuss the Nginx ngx_http_proxy_module and ngx_http_upstream_module modules, which let Nginx proxy connections to backend application web servers and balance load across them. With proxying, Nginx passes requests to upstream web servers for the final processing of a web request.

Proxying requests to one or more upstream servers lets you add load balancing, scalability, and security to a web application architecture.

I will look at setting up a proxy server with Nginx, using the upstream settings to talk to multiple Apache HTTPD web servers for load balancing, and implementing caching at the Nginx level to reduce the load on the upstream servers.

- Basic Terms

- Pre-Requisites

- Install Nginx

- Setup Nginx as a Reverse Proxy

- Configure Nginx Load Balancing

- Nginx Load Balancing Strategies

- Completed Virtual Host with Proxy File Code

- Add Nginx Content Caching to the Mix

- Example Nginx Virtual Host Configuration File with Proxy, Load Balancing and Caching Enabled

- Bonus: Advanced Configuration Settings

- Conclusion

Basic Terms

Content Cache

Content caching is a performance optimization mechanism in which Nginx first checks its own cache to deliver content to clients. Only if it cannot find the requested data does it proxy the request to the upstream server. Depending on the website, request throughput can increase by more than 100x.

Reverse Proxy

A proxy server is an intermediary host that forwards requests for content from multiple clients to one or more servers across a network. A reverse proxy is a sub-type of a proxy server that directs client requests to the appropriate backend server. Reverse proxies are typically utilized to provide an additional level of abstraction and control to network traffic between clients and servers.

Common uses for reverse proxies include filtering, load balancing, security, and content delivery acceleration through caching.

Upstream Server

In general networking terms, an upstream server refers to a server that provides service to another server. In the scope of web applications, an upstream server sits behind a proxy server. An upstream server is also referred to as an Origin server.

Pre-Requisites

Before starting on the exact configuration steps, I am assuming that you have the following up and running.

- Operating System: BSD, Linux, or Windows is installed.

- You have an upstream web server installed, such as an Apache HTTPD web server or Apache Tomcat. (Note: You may need admin rights to view log files and manage the server.) You need to change the port Apache is listening on. If unsure how to do this, check out the FAQ item before proceeding. I will assume your upstream servers are listening on port 90.

Note: For this tutorial, I am assuming the following URLs for your servers.

Nginx: http://localhost

Apache HTTPD or Tomcat hosts

- Host A: http://hosta:90

- Host B: http://hostb:90

You can rename hosta and hostb to your actual server names.

Install Nginx

Installing on Debian/Ubuntu

On the command shell issue the following command to install Nginx.

user@mars:~$ sudo apt install nginx

Note: By default, Nginx listens on port 80, the same as the Apache server. You will need to change the Apache port number to 90, the port used throughout this guide.

To start and stop Nginx, use the following commands.

user@mars:~$ sudo service nginx stop ### Stop the server

user@mars:~$ sudo service nginx start ### Start the server

### You can also stop and start Nginx with a single command ###

user@mars:~$ sudo service nginx restart

Install Nginx on Windows

To install it on Windows go to the download section of the Nginx website and download the stable release. Install it anywhere you want on the system.

You will need to remember the following commands to start and stop the server on Windows.

C:\apps\nginx>start nginx.exe ### This will start the Nginx server in the background.

C:\apps\nginx>nginx.exe -s stop ### Stop the Nginx server.

Setup Nginx as a Reverse Proxy

On a clean install of Nginx on Ubuntu, you will have a website set up with the name default. The file is installed in the following path.

user@mars:~$ ls -l /etc/nginx/sites-available/

total 32

-rw-r--r-- 1 root root 3015 Apr 10 2022 default

Basic Proxy_Pass Setup

For a basic setup, I will edit the virtual host file /etc/nginx/sites-available/default, replacing the existing content with the following.

server {

listen 80;

server_name localhost;

location / {

proxy_pass http://127.0.0.1:90;

}

}

Note: On Windows, copy the server block above into the http block of the config file located at:

C:\{path to Nginx install}\conf\nginx.conf

Let’s go over each of these lines.

- server_name: Sets up the server name. I am listening for localhost. You can change this to the domain or hostname you want Nginx to serve.

- location /: Routes all requests from the root of the domain.

- proxy_pass: This is the key. You are instructing Nginx to proxy all requests to the upstream server on 127.0.0.1 listening on port 90. You can also use hostnames instead of IP addresses.
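As a hedged variation on the block above, the same proxy can point at a hostname rather than an IP address. This sketch assumes that hosta (the placeholder name from the prerequisites) resolves on the Nginx machine. Nginx resolves a literal hostname in proxy_pass when it loads the configuration, so the name must be resolvable at startup.

```nginx
server {
    listen 80;
    server_name localhost;

    location / {
        # hosta is the placeholder upstream host used in this guide;
        # replace it with a name that resolves on your Nginx machine.
        proxy_pass http://hosta:90;
    }
}
```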

Playing with Proxy Header Values

With the basic setup shown above, Nginx does not forward all the headers from the client to the upstream servers. Assuming the client is connecting from IP 230.40.200.10, the IP seen by the upstream server will be the IP of the Nginx server, not that of the client. If you monitor the Apache log, you will find the following entry in the log file.

127.0.0.1 - - [14/Nov/2022:12:52:18 -0800] "GET / HTTP/1.0" 200 570

It shows the IP of the Nginx server.

This happens because Nginx changes some of the request headers received from the client while proxying the request. The following rules are applied.

- Removes empty headers.

- Headers with underscores are removed by default. If you want to forward these upstream, you will need to enable the underscores_in_headers directive. Valid options are on and off.

- The host header is rewritten to the $proxy_host variable. This will be a value as defined by the proxy_pass directive.
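As a minimal sketch of the underscore rule, the fragment below turns on underscores_in_headers in the server block from the basic setup. The header name in the comment is hypothetical and only illustrates the kind of header that would otherwise be dropped.

```nginx
server {
    listen 80;
    server_name localhost;

    # Accept client request headers whose names contain underscores
    # (for example a hypothetical "X_Custom_Id"). The default is off.
    underscores_in_headers on;

    location / {
        proxy_pass http://127.0.0.1:90;
    }
}
```

The directive is also valid in the http block, which may be the simpler place for it if several virtual hosts share the same listen address.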

To pass the original host and client address upstream, I add the following proxy_set_header directives to the location block.

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Forwarded-Proto $scheme;

With these changes, when I connect through Nginx to the upstream servers, my Apache server log file correctly shows the IP I am using. This assumes Apache is set up to log the forwarded address, for example through mod_remoteip or a LogFormat that records X-Forwarded-For. (Remember to restart Nginx after making changes to the config file.)

192.168.0.18 - - [14/Nov/2022:12:58:18 -0800] "GET / HTTP/1.0" 200 570

Configure Nginx Load Balancing

To enable load-balancing I need to make changes to the virtual host file (on Ubuntu) or the nginx.conf file on Windows. This will require two edits.

- Create an upstream configuration block to define all the backend servers Nginx should balance between.

- Update the proxy_pass directive to use the upstream block.

Nginx Load Balancing Strategies

Nginx provides several load-balancing strategies. This guide covers four of them.

- Round Robin: This is the default strategy. Nginx sends a request to each server in the upstream block in turn before returning to the first host. For example, if you are using 2 upstream (or origin) servers, the first request will go to hosta, the second to hostb, the third to hosta, and so on.

- Hash: Specifies a load-balancing method for a server group where the client-server mapping is based on the hashed key value. Adding or removing a server from the group may result in remapping most of the keys to different servers. The method is compatible with the Cache::Memcached Perl library.

- IP_Hash: Requests are distributed between servers based on client IP addresses. The first three octets of the client IPv4 address, or the entire IPv6 address, are used as a hashing key. The method ensures that requests from the same client will always be passed to the same server except when this server is unavailable.

- Random: A request is passed to a randomly selected server, taking into account the value of weight. The optional two parameter instructs Nginx to randomly select two servers and then choose between them using the specified method, least_conn or least_time. least_conn passes a request to the server with the least number of active connections, whereas least_time (available in the commercial NGINX Plus) passes a request to the server with the least average response time and least number of active connections.
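The strategies above can be sketched as upstream blocks like the following. The upstream names are hypothetical, the hosts are the placeholders used in this guide, and each block is an alternative: a real configuration would normally define only the one it needs. The hash key ($request_uri) is just one possible choice.

```nginx
# Round robin: no directive needed, it is the default.
upstream rr_hosts {
    server hosta:90;
    server hostb:90;
}

# Hash: map requests to servers by a key, here the request URI.
# The optional "consistent" parameter limits how many keys are
# remapped when a server is added or removed.
upstream hash_hosts {
    hash $request_uri consistent;
    server hosta:90;
    server hostb:90;
}

# IP hash: requests from the same client address go to the same server.
upstream iphash_hosts {
    ip_hash;
    server hosta:90;
    server hostb:90;
}

# Random: pick two servers at random, then prefer the one
# with fewer active connections.
upstream random_hosts {
    random two least_conn;
    server hosta:90;
    server hostb:90;
}
```

Whichever block you choose, the strategy directive is placed inside the upstream block alongside the server lines.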

Upstream Block

The Nginx upstream configuration block provides the list of backend servers to which requests are proxied. You can also define other values, such as a load-balancing strategy.

Below is an example of the upstream configuration block I have used for this guide. It goes in the http context, outside the server block (at the top level of the virtual host file on Ubuntu).

upstream loophosts {

server hosta:90;

server hostb:90;

}

The above configuration adds two upstream servers, hosta and hostb, listening on port 90. I have named the upstream block loophosts.

There is another option you can set, weight. In the example shown below, I am configuring Nginx to send two out of every three requests to hosta and every third request to hostb.

upstream loophosts {

server hosta:90 weight=2;

server hostb:90 weight=1;

}

I can also use the backup option, which marks the server as a backup server. It will be passed requests only when the primary servers are unavailable.

upstream loophosts {

server hosta:90 weight=2;

server hostb:90 weight=1;

server backuphost:90 backup;

}

You can check other options in the Nginx documentation of the upstream module.
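As a small, hedged illustration of those other options, the sketch below adds passive failure detection with max_fails and fail_timeout, and takes one server out of rotation with down. The host hostc and the specific values are assumptions for illustration, not recommendations.

```nginx
upstream loophosts {
    # After 3 failed attempts within 30s, treat hosta as
    # unavailable for the next 30s.
    server hosta:90 weight=2 max_fails=3 fail_timeout=30s;

    server hostb:90 weight=1;

    # hostc is a hypothetical third server, marked as permanently
    # unavailable (for example, during maintenance).
    server hostc:90 down;

    # Used only when the primary servers are unavailable.
    server backuphost:90 backup;
}
```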

Updating Proxy_Pass Directive to Use Upstream Server

By nature, the Nginx load balancer is a form of reverse proxy server. It behaves exactly like a reverse proxy with one difference: it can distribute requests (round-robin, by default) between multiple backend servers. To put the upstream block to use, reference it by name from within a location block:

location / {

proxy_pass http://loophosts;

}

Completed Virtual Host with Proxy File Code

With all the changes as shown above my configuration file will be:

upstream loophosts {

server hosta:90 weight=2;

server hostb:90 weight=1;

}

server {

listen 80;

server_name localhost;

location / {

proxy_pass http://loophosts;

}

}

Add Nginx Content Caching to the Mix

I have a complete tutorial with test cases showing how you can enable Nginx caching. Here I will provide the caching options you can use with the configuration file above. Add the following lines to your location block.

proxy_cache tmpcache;

proxy_cache_key $scheme://$host$request_uri;

proxy_cache_methods GET HEAD POST;

proxy_cache_valid 200 1d;

proxy_cache_use_stale error timeout invalid_header updating http_500 http_502 http_503 http_504;

The most important parts to note regarding the code above are the first two lines.

- proxy_cache: Names the shared memory zone used for caching. The zone, along with the physical location of the cached content, is defined by the proxy_cache_path directive in the http context.

- proxy_cache_key: If you have multiple virtual hosts on Nginx, you need to add this line. Without it, content from different domains can end up under the same cache key, so clients may receive a response cached for another site.
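To check whether a response actually came from the cache, one common approach is to expose the $upstream_cache_status variable in a response header. The sketch below is a minimal example under stated assumptions: the header name X-Cache-Status is a convention rather than anything Nginx requires, and the single upstream at 127.0.0.1:90 is borrowed from the basic setup.

```nginx
# http context: on-disk cache location plus a shared memory zone
# named tmpcache, which proxy_cache refers to below.
proxy_cache_path /tmp/nginxcache levels=1:2 keys_zone=tmpcache:1000m;

server {
    listen 80;
    server_name localhost;

    location / {
        proxy_pass http://127.0.0.1:90;

        proxy_cache tmpcache;
        proxy_cache_key $scheme://$host$request_uri;
        proxy_cache_valid 200 1d;

        # Reports values such as MISS, HIT, or EXPIRED to the client.
        add_header X-Cache-Status $upstream_cache_status;
    }
}
```

Requesting the same URL twice and comparing the header values is a quick way to confirm that the second response was served from the cache.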

Example Nginx Virtual Host Configuration File with Proxy, Load Balancing and Caching Enabled

My completed configuration file is as follows.

proxy_cache_path /tmp/nginxcache levels=1:2 keys_zone=tmpcache:1000m;

upstream loophosts {

server hosta:90 weight=2;

server hostb:90 weight=1;

}

server {

listen 80;

server_name localhost;

location / {

proxy_pass http://loophosts;

proxy_cache tmpcache;

proxy_cache_key $scheme://$host$request_uri;

proxy_cache_methods GET HEAD POST;

proxy_cache_valid 200 1d;

proxy_cache_use_stale error timeout invalid_header updating http_500 http_502 http_503 http_504;

}

}

Bonus: Advanced Configuration Settings

Let’s look at some advanced ways for using various proxy_pass settings.

Using Buffers with Proxy_Pass and Upstream Origin Servers

By default, Nginx buffers the HTTP response from the upstream server before sending it back to the client. This lets Nginx free the upstream connection quickly even when clients have slow network connections. You can turn buffering off by using the following in the server block.

proxy_buffering off;

To tune buffering instead, the following options are available as well.

proxy_buffering on;

proxy_buffer_size 1k;

proxy_buffers 24 4k;

proxy_busy_buffers_size 16k;

proxy_max_temp_file_size 1048m;

proxy_temp_file_write_size 64k;

Using Slash (/) in the URLs

Be aware that inconsistent use of the trailing slash can cause unexpected URL mapping errors. The table below shows one scenario where the mapped request results in an incorrect path.

| location | proxy_pass | Request | Received by upstream |
|---|---|---|---|
| /app/ | http://localhost:90/ | /app/foo?bar=car | /foo?bar=car |
| /app | http://localhost:90/ | /app/foo?bar=car | //foo?bar=car |

To eliminate these issues, review your location and proxy_pass settings, as 4xx and 5xx errors may be caused by these mappings.
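As a short sketch of how to keep the mapping predictable, the two location blocks below show the matching-slash form from the table next to the form where proxy_pass has no URI part at all. The /raw/ prefix is a hypothetical path added only for contrast.

```nginx
server {
    listen 80;
    server_name localhost;

    # Trailing slash on both sides: the /app/ prefix is replaced by /.
    # /app/foo?bar=car  ->  /foo?bar=car
    location /app/ {
        proxy_pass http://localhost:90/;
    }

    # No URI part on proxy_pass: the request path is forwarded unchanged.
    # /raw/foo?bar=car  ->  /raw/foo?bar=car
    location /raw/ {
        proxy_pass http://localhost:90;
    }
}
```

Keeping the trailing slash consistent between location and proxy_pass, or leaving the URI part off proxy_pass entirely, avoids the double-slash path shown in the second table row.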

Using RegEx in Directives

RegEx mapping is very powerful for rewriting URLs with the proxy_pass directive. In the example shown below, the public URL is http://localhost/api/location/homes/111 and the upstream URL is http://localhost:90/location_api?homes=111. The following RegEx location maps the request correctly.

location ~ ^/api/location/([a-z]*)/(.*)$ {

proxy_pass http://127.0.0.1:90/location_api?$1=$2;

}

Conclusion

I hope you found this post helpful. Just like Apache web server is hosting the internet, Nginx is helping it scale.

You can learn more about Apache web server and Nginx web server on our website.

Let me know if you have any questions.