Web servers like Apache, IIS, and Nginx serve client requests over HTTP. Along the way, they can record information about requests and responses in log files using standard log formats. Because the same formats are used across different web servers, log entries are easy to read regardless of which server produced them.

In this post, I will go over multiple ways to parse and visualize Apache and Nginx web server log data with Java.

Understanding Apache and Nginx Common Log Formats

Before diving into parsing web server logs with Java, it’s crucial to understand the structure and format of Apache and Nginx log files. Both web servers have default log formats that can be customized as needed.

The most common standard formats are the Common Log Format and the Combined Log Format, both of which originated with the NCSA HTTPd server. Working with a standard format makes log file entries easy to understand, and many free and commercial log parsing tools read these formats out of the box.

Apache Web Server Log Format

Apache web server has two primary log formats, each serving a specific purpose:

| Log Format | Description |
|---|---|
| Common Log Format (CLF) | The CLF is the default log format for Apache web servers. Each line in the log file represents a single request and contains information such as the client’s IP address, date and time, request method, requested resource, HTTP status code, and the number of bytes sent in response. CLF format string: %h %l %u %t \"%r\" %>s %b |
| Combined Log Format | The Combined Log Format extends the CLF by adding two more fields: the referrer and the user agent. This additional information can be useful for understanding the origin of requests and the type of clients accessing the web server. Combined format string: %h %l %u %t \"%r\" %>s %b \"%{Referer}i\" \"%{User-agent}i\" |

Note: In certain cases, you may want to log details that the standard log formats do not provide. In that case, you can customize the log format to output them. Check out my post on Apache log formats and the available customization options.

Nginx Log Formats

Nginx has a default access log format, which is similar to Apache’s CLF but with a few variations:

| Log Format | Description |
|---|---|
| Access log format | The default log format for Nginx includes fields such as the client’s IP address, date and time, request method, requested resource, HTTP status code, response size, referrer, and user agent. Nginx access log format string: $remote_addr - $remote_user [$time_local] \"$request\" $status $body_bytes_sent \"$http_referer\" \"$http_user_agent\" |
| Custom Log Format | Nginx allows users to create custom log formats to include additional information or modify the order of fields in the log file. You can configure this using the log_format directive in the Nginx configuration file. |

Key Differences Between Apache and Nginx Log Formats

While Apache and Nginx log formats share many similarities, there are some notable differences:

| Aspect | Difference |
|---|---|
| Syntax | Apache’s log format syntax relies on percent signs and curly braces, whereas Nginx uses variables denoted by dollar signs. |
| Column Order | Apache’s Combined Log Format and Nginx’s default log format both end with the referrer and user agent fields, in the same order. Only the syntax used to declare them differs. |
| Log Directives | Customizing log formats is done differently in Apache and Nginx. Apache uses the LogFormat directive, while Nginx uses the log_format directive. |

Understanding these log formats is essential for creating a Java parser that can accurately process both Apache and Nginx log files.
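As a rough illustration of how close the two formats are, the sketch below uses a single pattern whose referrer and user-agent groups are optional, so it accepts both a Common and a Combined entry. The class name `AccessLogFormats` and the sample lines are made up for this example, and a customized log format would need a different pattern.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class AccessLogFormats {

    // Common Log Format fields, followed by an optional "referrer" "user agent" pair.
    private static final Pattern ENTRY = Pattern.compile(
            "^(\\S+) (\\S+) (\\S+) \\[([^\\]]+)\\] \"([^\"]*)\" (\\d{3}) (\\S+)"
            + "(?: \"([^\"]*)\" \"([^\"]*)\")?$");

    /** Returns "combined", "common", or "unknown" for a single log line. */
    public static String detectFormat(String line) {
        Matcher m = ENTRY.matcher(line);
        if (!m.matches()) {
            return "unknown";
        }
        // Group 9 (user agent) only participates when the trailing pair is present.
        return m.group(9) != null ? "combined" : "common";
    }

    public static void main(String[] args) {
        String common = "127.0.0.1 - - [09/Apr/2023:09:01:15 -0700] \"GET / HTTP/1.1\" 200 2326";
        String combined = common + " \"-\" \"Mozilla/5.0\"";
        System.out.println(detectFormat(common));
        System.out.println(detectFormat(combined));
    }
}
```

Detecting the format per line, rather than per file, keeps the sketch usable when a log file was written under more than one configuration.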

In the worked example at the end of this post, though, I will be parsing only Common Log Format entries.

Java Libraries For Parsing Log Files

Parsing web server logs involves reading log files, processing each log entry, and extracting relevant information. Although you can accomplish these tasks using native Java libraries, third-party libraries can simplify the process and improve performance. In this section, we’ll discuss popular Java libraries for parsing log files:

Standard Java I/O API

Java’s built-in I/O API provides classes and interfaces for handling file reading and writing operations. These classes, such as FileReader, BufferedReader, and BufferedWriter, form the foundation for reading and processing log files.

Apache Commons I/O

Apache Commons IO is a powerful open-source library that offers utility classes and methods for handling I/O operations more efficiently. It simplifies common tasks, such as reading entire files into memory, iterating over lines in a file, or streaming large files. Some of its key classes include FileUtils, IOUtils, and LineIterator.

- FileUtils: The FileUtils class provides static utility methods for working with files and directories, such as reading a file into a string, copying files or directories, and deleting files or directories.

- LineIterator: The LineIterator class allows you to iterate over the lines in a file without reading the entire file into memory, which is useful for processing large log files.

- IOUtils: The IOUtils class offers static utility methods for handling input and output streams, such as converting streams to strings or byte arrays, and closing streams safely.

Google Guava

Google Guava is a popular open-source Java library that provides a rich set of utility classes and methods for various programming tasks, including file I/O operations. Guava’s Files and CharStreams classes simplify file reading and writing tasks.

- Files: The Files class offers utility methods for handling file operations, such as reading a file into a string, creating a temporary file, or copying files.

- CharStreams: The CharStreams class provides utility methods for working with character streams, such as converting a reader to a string, reading lines from a reader, and writing a string to a writer.

Other Useful Java Libraries

Depending on your log parsing requirements and goals, you may want to explore additional Java libraries, such as:

- Logstash Logback Encoder: A Logback extension that writes application logs in Logstash-compatible JSON format, which is useful when your own Java application produces the logs you later want to analyze.

- Jackson: A high-performance JSON processing library for parsing log files in JSON format or exporting parsed data to JSON.

By leveraging these libraries, you can simplify the process of parsing Apache and Nginx log files, reduce boilerplate code, and improve the performance of your log analysis application.

Step 1: Reading Log Files with Java

To parse Apache and Nginx log files, you’ll first need to read the files using Java. In this section, we’ll explore different methods of reading log files using Java’s built-in I/O API, Apache Commons IO, and Google Guava.

Read Apache Log File With Standard Java I/O

Java’s built-in I/O API provides classes for reading and writing files. The following example demonstrates how to read a log file line by line using FileReader and BufferedReader:

import java.io.BufferedReader;
import java.io.FileReader;
import java.io.IOException;

public class LogFileReader {
    public static void main(String[] args) {
        String logFilePath = "/path/to/logfile.log";
        try (FileReader fileReader = new FileReader(logFilePath);
             BufferedReader bufferedReader = new BufferedReader(fileReader)) {
            String line;
            while ((line = bufferedReader.readLine()) != null) {
                // Process log entry
                System.out.println(line);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}

In the example above, we use the try-with-resources statement to automatically close the FileReader and BufferedReader instances when they are no longer needed. This helps prevent resource leaks and ensures proper cleanup of system resources.
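One caveat with `FileReader`: before Java 18, its single-argument constructor decodes the file with the platform default charset. A possible alternative is sketched here with `java.nio.file.Files.newBufferedReader` and an explicit UTF-8 charset. The class name `NioLogFileReader` and the `countLines` helper are illustrative only.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

public class NioLogFileReader {

    /** Reads the file line by line as UTF-8 and returns the number of lines seen. */
    public static long countLines(Path logFile) throws IOException {
        long count = 0;
        try (BufferedReader reader = Files.newBufferedReader(logFile, StandardCharsets.UTF_8)) {
            String line;
            while ((line = reader.readLine()) != null) {
                // Process the log entry here; this sketch only counts lines.
                count++;
            }
        }
        return count;
    }

    public static void main(String[] args) throws IOException {
        // Placeholder path, as in the example above.
        System.out.println(countLines(Paths.get("/path/to/logfile.log")));
    }
}
```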

Read Apache Log File Using Apache Commons I/O

Apache Commons IO provides utility classes that simplify file reading tasks. The following example demonstrates how to read a log file line by line using FileUtils and LineIterator:

import org.apache.commons.io.FileUtils;
import org.apache.commons.io.LineIterator;

import java.io.File;
import java.io.IOException;

public class LogFileReader {
    public static void main(String[] args) {
        File logFile = new File("/path/to/logfile.log");
        try (LineIterator lineIterator = FileUtils.lineIterator(logFile, "UTF-8")) {
            while (lineIterator.hasNext()) {
                String line = lineIterator.nextLine();
                // Process log entry
                System.out.println(line);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}

Read Apache Log File with Google Guava

Google Guava provides utility classes for file reading tasks as well. The following example demonstrates how to read a log file line by line using Guava’s Files.newReader() method, which returns a BufferedReader directly:

import com.google.common.io.Files;

import java.io.BufferedReader;
import java.io.File;
import java.io.IOException;
import java.nio.charset.StandardCharsets;

public class LogFileReader {
    public static void main(String[] args) {
        File logFile = new File("/path/to/logfile.log");
        // Files.newReader already returns a BufferedReader, so no extra wrapping is needed.
        try (BufferedReader bufferedReader = Files.newReader(logFile, StandardCharsets.UTF_8)) {
            String line;
            while ((line = bufferedReader.readLine()) != null) {
                // Process log entry
                System.out.println(line);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}

By using one of these methods to read log files with Java, you can begin processing and analyzing log data from Apache and Nginx web servers.

Step 2: Parsing Log File Entries With Java

Once you’ve read the log files, the next step is to parse each log entry and extract the relevant information. In this section, we’ll explore two methods for parsing log entries with Java: using regular expressions and the String.split() method.

Using Regular Expressions

Java’s Pattern and Matcher classes provide robust support for working with regular expressions. The following example demonstrates how to parse Apache and Nginx log entries using regular expressions:

import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class LogEntryParser {
    private static final String LOG_ENTRY_PATTERN = "^(\\S+) (\\S+) (\\S+) \\[([\\w:/]+\\s[+\\-]\\d{4})\\] \"(.+?)\" (\\d{3}) (\\S+) \"(.*?)\" \"(.*?)\"$";
    private static final Pattern pattern = Pattern.compile(LOG_ENTRY_PATTERN);

    public static void main(String[] args) {
        String logEntry = "127.0.0.1 - - [09/Apr/2023:09:01:15 -0700] \"GET / HTTP/1.1\" 200 2326 \"-\" \"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.82 Safari/537.36\"";
        Matcher matcher = pattern.matcher(logEntry);
        if (matcher.matches()) {
            String ipAddress = matcher.group(1);
            String timestamp = matcher.group(4);
            String requestLine = matcher.group(5);
            String statusCode = matcher.group(6);
            String referrer = matcher.group(8);
            String userAgent = matcher.group(9);
            // Process parsed data
            System.out.println("IP Address: " + ipAddress);
            System.out.println("Timestamp: " + timestamp);
            System.out.println("Request Line: " + requestLine);
            System.out.println("Status Code: " + statusCode);
            System.out.println("Referrer: " + referrer);
            System.out.println("User Agent: " + userAgent);
        } else {
            System.out.println("Invalid log entry format");
        }
    }
}

The regular expression used in the example above is designed to work with both Apache’s Combined Log Format and Nginx’s default log format. You may need to adjust the regular expression to match your specific log format if you have customized it.
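Numbered groups are easy to mix up once a pattern grows. A possible variant, assuming the same Combined-style entry, uses Java's named capturing groups (`(?<name>...)`). The group names and the `NamedGroupParser` class are chosen here purely for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class NamedGroupParser {

    private static final Pattern ENTRY = Pattern.compile(
            "^(?<ip>\\S+) (?<ident>\\S+) (?<user>\\S+) \\[(?<time>[^\\]]+)\\] "
            + "\"(?<request>.+?)\" (?<status>\\d{3}) (?<bytes>\\S+) "
            + "\"(?<referrer>.*?)\" \"(?<agent>.*?)\"$");

    private static final String[] FIELDS =
            {"ip", "time", "request", "status", "bytes", "referrer", "agent"};

    /** Returns the fields by name, or an empty map when the line does not match. */
    public static Map<String, String> parse(String line) {
        Map<String, String> fields = new LinkedHashMap<>();
        Matcher m = ENTRY.matcher(line);
        if (m.matches()) {
            for (String name : FIELDS) {
                fields.put(name, m.group(name));
            }
        }
        return fields;
    }

    public static void main(String[] args) {
        String entry = "127.0.0.1 - - [09/Apr/2023:09:01:15 -0700] \"GET / HTTP/1.1\" 200 2326 \"-\" \"Mozilla/5.0\"";
        parse(entry).forEach((name, value) -> System.out.println(name + ": " + value));
    }
}
```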

An alternative approach to parsing log entries is to use the String.split() method. This method splits a string into an array of substrings based on a specified delimiter. The following example demonstrates how to parse Apache and Nginx log entries using the String.split() method:

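public class LogEntryParser {
    public static void main(String[] args) {
        String logEntry = "127.0.0.1 - - [09/Apr/2023:09:01:15 -0700] \"GET / HTTP/1.1\" 200 2326 \"-\" \"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.82 Safari/537.36\"";
        // Split on spaces that fall outside double-quoted fields.
        String[] logEntryParts = logEntry.split(" (?=([^\"]*\"[^\"]*\")*[^\"]*$)");
        // The bracketed timestamp contains a space, so it spans two parts (10 parts in total).
        if (logEntryParts.length == 10) {
            String ipAddress = logEntryParts[0];
            String statusCode = logEntryParts[7];
            System.out.println(ipAddress + " " + statusCode);
        }
    }
}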

Step 3: Storing Parsed Log File Data

After parsing log entries, it’s essential to store the extracted data in a structured format for further analysis and processing. In this section, we’ll discuss creating custom Java objects and using data structures to store parsed log data.

Creating Custom Java Objects

One way to store parsed log data is to create custom Java objects, which allows for better organization and easier data manipulation. The following example demonstrates how to create a LogEntry class to represent a single log entry:

public class LogEntry {
    private String ipAddress;
    private String timestamp;
    private String requestLine;
    private int statusCode;
    private long responseSize;
    private String referrer;
    private String userAgent;

    public LogEntry(String ipAddress, String timestamp, String requestLine, int statusCode, long responseSize, String referrer, String userAgent) {
        this.ipAddress = ipAddress;
        this.timestamp = timestamp;
        this.requestLine = requestLine;
        this.statusCode = statusCode;
        this.responseSize = responseSize;
        this.referrer = referrer;
        this.userAgent = userAgent;
    }

    // Getters and setters for each field

    // Additional methods for data manipulation or analysis
}

The LogEntry class has fields for storing information such as IP address, timestamp, request line, status code, response size, referrer, and user agent. You can customize this class to include additional fields, depending on your log format and analysis requirements.
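The `timestamp` field is kept as a raw string in the class above. If you want to sort or bucket entries by time, one option is to convert it with `java.time`. The sketch below assumes the usual `dd/MMM/yyyy:HH:mm:ss Z` layout with English month names; `LogTimestamps` is a hypothetical helper, not part of the original code.

```java
import java.time.OffsetDateTime;
import java.time.format.DateTimeFormatter;
import java.util.Locale;

public class LogTimestamps {

    // Locale.ENGLISH so that month abbreviations such as "Apr" parse regardless of the system locale.
    private static final DateTimeFormatter FORMAT =
            DateTimeFormatter.ofPattern("dd/MMM/yyyy:HH:mm:ss Z", Locale.ENGLISH);

    /** Parses a timestamp such as "09/Apr/2023:09:01:15 -0700" (without the brackets). */
    public static OffsetDateTime parse(String timestamp) {
        return OffsetDateTime.parse(timestamp, FORMAT);
    }

    public static void main(String[] args) {
        OffsetDateTime time = parse("09/Apr/2023:09:01:15 -0700");
        System.out.println(time);
    }
}
```

Keeping the offset (rather than converting to a local time) preserves what the server actually logged, which matters when logs from servers in different time zones are merged.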

Once you have created the LogEntry class, you can use Java data structures to store multiple log entries. Common data structures include ArrayList, LinkedList, and HashSet:

import java.util.ArrayList;
import java.util.List;

public class LogDataStorage {
    public static void main(String[] args) {
        List<LogEntry> logEntries = new ArrayList<>();
        // Add parsed LogEntry objects to the list
    }
}

import java.util.LinkedList;
import java.util.List;

public class LogDataStorage {
    public static void main(String[] args) {
        List<LogEntry> logEntries = new LinkedList<>();
        // Add parsed LogEntry objects to the list
    }
}

import java.util.HashSet;
import java.util.Set;

public class LogDataStorage {
    public static void main(String[] args) {
        Set<LogEntry> logEntries = new HashSet<>();
        // Add parsed LogEntry objects to the set
    }
}

The choice of data structure depends on the specific log analysis tasks you plan to perform. For example, if you need fast random access to log entries, an ArrayList would be appropriate. If you need to ensure uniqueness among log entries, a HashSet would be a better choice.
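For the `HashSet` case, uniqueness is decided by `equals()` and `hashCode()`, so the element class has to override both. A trimmed-down sketch, using a hypothetical two-field `RequestKey` class rather than the full `LogEntry`:

```java
import java.util.HashSet;
import java.util.Objects;
import java.util.Set;

public class RequestKey {
    private final String ipAddress;
    private final String requestLine;

    public RequestKey(String ipAddress, String requestLine) {
        this.ipAddress = ipAddress;
        this.requestLine = requestLine;
    }

    @Override
    public boolean equals(Object other) {
        if (this == other) return true;
        if (!(other instanceof RequestKey)) return false;
        RequestKey that = (RequestKey) other;
        return ipAddress.equals(that.ipAddress) && requestLine.equals(that.requestLine);
    }

    @Override
    public int hashCode() {
        return Objects.hash(ipAddress, requestLine);
    }

    public static void main(String[] args) {
        Set<RequestKey> unique = new HashSet<>();
        unique.add(new RequestKey("127.0.0.1", "GET / HTTP/1.1"));
        unique.add(new RequestKey("127.0.0.1", "GET / HTTP/1.1")); // equal to the first, so not added
        unique.add(new RequestKey("10.0.0.5", "GET / HTTP/1.1"));
        System.out.println(unique.size()); // prints 2
    }
}
```

Without the two overrides, every object would count as distinct and the set would hold all three entries.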

Step 4: Analyzing and Visualizing Parsed Log Data

Once the log data has been parsed and stored in a structured format, you can perform various analysis tasks and visualize the results for better insights. In this section, we’ll discuss some common log analysis tasks and introduce Java libraries for data visualization.

Common Log Analysis Tasks

Here are some common log analysis tasks you might perform using the parsed log data:

- Count the number of requests per IP address

- Determine the most frequent status codes

- Analyze the distribution of request methods (GET, POST, PUT, DELETE, etc.)

- Identify the most requested resources or endpoints

- Calculate the average response size (or response time, if your log format has been customized to record it)

- Detect patterns of suspicious activities, such as repeated failed login attempts or unusually high request rates
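Several of these tasks reduce to grouping and counting. As a sketch, assuming the values of interest (status codes, IP addresses, or requested paths) have already been extracted into a list, the following helper counts occurrences and picks the most frequent one. The `LogStats` class and its method names are invented for this example.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.function.Function;
import java.util.stream.Collectors;

public class LogStats {

    /** Counts how often each value occurs. */
    public static <T> Map<T, Long> frequencies(List<T> values) {
        return values.stream()
                .collect(Collectors.groupingBy(Function.identity(), Collectors.counting()));
    }

    /** Returns the most frequent value, or an empty Optional for an empty list. */
    public static <T> Optional<T> mostFrequent(List<T> values) {
        return frequencies(values).entrySet().stream()
                .max(Map.Entry.comparingByValue())
                .map(Map.Entry::getKey);
    }

    public static void main(String[] args) {
        List<Integer> statusCodes = Arrays.asList(200, 200, 404, 200, 500, 404);
        System.out.println(frequencies(statusCodes));
        System.out.println(mostFrequent(statusCodes).orElse(null));
    }
}
```

The same two methods work for IP addresses or requested resources, since they are generic over the element type.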

Java Libraries For Data Visualization

Visualizing the results of your log analysis can make it easier to identify trends, patterns, and anomalies. There are several Java libraries available for creating charts, graphs, and other visualizations, including:

| Name | Description |

|---|---|

| JFreeChart | JFreeChart is a popular open-source library for creating a wide variety of charts, including bar charts, line charts, pie charts, and more. It supports various output formats, such as PNG, JPEG, and SVG, and can be easily integrated into Java applications, applets, or web applications. |

| XChart | XChart is a lightweight, easy-to-use library for creating charts in Java applications. It offers a simple API for creating various types of charts, such as line charts, scatter plots, bar charts, and pie charts. The library supports real-time updates, customizable styling, and multiple output formats. |

| JavaFX | JavaFX is a software platform for creating rich internet applications (RIAs) with Java. It includes a set of graphics and media packages for creating interactive 2D and 3D visualizations. JavaFX’s Chart API provides support for various types of charts, including line charts, area charts, bar charts, pie charts, and bubble charts. |

Example: Visualizing request distribution

Suppose you want to visualize the distribution of request methods (GET, POST, PUT, DELETE, etc.) in your parsed log data. You can use the JFreeChart library to create a pie chart representing the distribution:

Add the JFreeChart library to your project using Maven or Gradle, or by manually downloading the JAR file from the JFreeChart website.

import org.jfree.chart.ChartFactory;
import org.jfree.chart.ChartPanel;
import org.jfree.chart.JFreeChart;
import org.jfree.data.general.DefaultPieDataset;
import org.jfree.data.general.PieDataset;

import javax.swing.JFrame;

public class RequestDistributionVisualization extends JFrame {
    public RequestDistributionVisualization() {
        PieDataset dataset = createDataset();
        JFreeChart chart = ChartFactory.createPieChart("Request Method Distribution", dataset, true, true, false);
        ChartPanel chartPanel = new ChartPanel(chart);
        setContentPane(chartPanel);
    }

    private PieDataset createDataset() {
        DefaultPieDataset dataset = new DefaultPieDataset();
        // Replace the following values with your actual log data analysis results
        dataset.setValue("GET", 75);
        dataset.setValue("POST", 20);
        dataset.setValue("PUT", 3);
        dataset.setValue("DELETE", 2);
        return dataset;
    }

    public static void main(String[] args) {
        RequestDistributionVisualization visualization = new RequestDistributionVisualization();
        visualization.pack();
        visualization.setVisible(true);
        visualization.setDefaultCloseOperation(JFrame.EXIT_ON_CLOSE);
    }
}

By analyzing and visualizing parsed log data, you can gain valuable insights into the performance, security, and usage patterns of your Apache and Nginx web servers.
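The hard-coded values in `createDataset()` can be derived from parsed request lines. A minimal sketch, assuming each request line looks like `GET /index.html HTTP/1.1`: `MethodDistribution` is a hypothetical helper whose result you could feed to `dataset.setValue(method, count)` in a loop.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class MethodDistribution {

    /** Counts request lines by their first token (the HTTP method). */
    public static Map<String, Integer> count(List<String> requestLines) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String requestLine : requestLines) {
            String trimmed = requestLine.trim();
            if (trimmed.isEmpty()) {
                continue; // skip malformed entries with no request line
            }
            String method = trimmed.split("\\s+", 2)[0];
            counts.merge(method, 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> requestLines = Arrays.asList(
                "GET / HTTP/1.1", "POST /login HTTP/1.1", "GET /about HTTP/1.1");
        System.out.println(count(requestLines));
    }
}
```

A `TreeMap` is used only so that the methods come out in a stable alphabetical order, which keeps the pie chart legend consistent between runs.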

Example: Integrating Visualization Libraries With Web Applications

In addition to using visualization libraries in standalone Java applications, you can also integrate them with web applications to display visualizations in a browser. For example, you can use the following libraries to create interactive charts and graphs in your Java web applications:

| Name | Description |

|---|---|

| PrimeFaces | PrimeFaces is a popular UI component library for JavaServer Faces (JSF) applications. It offers a rich set of components, including various chart types, such as line, bar, pie, area, and more. PrimeFaces charts are based on the popular JavaScript charting library Chart.js, which provides interactive and responsive visualizations. |

| Thymeleaf + JavaScript charting libraries | If you are using Thymeleaf as a server-side Java template engine, you can easily integrate JavaScript charting libraries, such as Chart.js, Highcharts, or D3.js, to create interactive and responsive visualizations in your web application. |

| Vaadin | Vaadin is a Java framework for building modern web applications with a focus on simplicity and type-safety. It provides a set of built-in components, including charts and graphs, which can be easily integrated into your application. Vaadin charts are based on the Highcharts JavaScript library, offering a wide range of chart types and customization options. |

By integrating these visualization libraries with your web application, you can provide your users with an interactive and engaging experience, making it easier for them to understand and analyze the log data from your Apache and Nginx web servers.

Example: Parsing Logs with Java

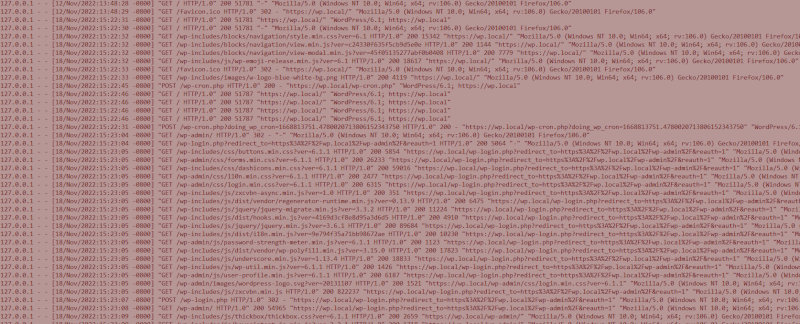

In this example, I read the log file and stream through each line, looking for entries with the IP 127.0.0.1 and counting those with HTTP status code 200.

For the Java program that parses the log file, I chose a standard command-line program. I have uploaded the test code to GitHub, and you can use it as a base to start from.

I pass a config file as a parameter to the program, which defines the various settings. My config file has the following entries.

# Name of the server. Could be Apache or Nginx.
server=apache
# Looking at the access log. The other option is the error log.
logtype=access
# Log file format. I have only coded for the common log format for now.
logformat=common
# Path to the log file.
logfilename=/var/logs/apache2/access.log

As the program develops further, more options can be added to the config file, but for now that is all I have.
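The parsing code further down reads settings through `Config.props.getProperty(...)`, which suggests `java.util.Properties`. That `Config` class is not shown in this post, so the loader below is only a guess at its shape, and `ConfigLoader` is a made-up name. One thing to watch: `Properties` treats `#` as a comment marker only at the start of a line, so a trailing comment becomes part of the value.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.util.Properties;

public class ConfigLoader {

    /** Loads key=value pairs from any Reader (a FileReader in a real program). */
    public static Properties load(Reader source) throws IOException {
        Properties props = new Properties();
        props.load(source);
        return props;
    }

    public static void main(String[] args) throws IOException {
        String config = String.join("\n",
                "# Name of the server: apache or nginx",
                "server=apache",
                "logformat=common # trailing text is NOT stripped");
        Properties props = load(new StringReader(config));
        System.out.println(props.getProperty("server"));
        System.out.println(props.getProperty("logformat"));
    }
}
```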

Common Log Format RegEx

I am using the following regex to parse the common log format.

String COMMON_LOG_FORMAT = "^(\\S+) (\\S+) (\\S+) \\[([\\w:/]+\\s[+\\-]\\d{4})\\] \"(\\S+) (\\S+)\\s*(\\S+)?\\s*\" (\\d{3}) (\\S+)";

Java Parsing Loop for Log Entries

My Java method to parse the log entries looks like this.

public void filterWithRegex() throws IOException {
    String regString = Config.props.getProperty("search-expression");
    final Pattern linePattern = Pattern.compile(regString); // For filtering.
    final Pattern columnPattern = Pattern.compile(COMMON_LOG_FORMAT, Pattern.MULTILINE);
    try (Stream<String> stream = Files.lines(logfile.getLogFile())) {
        var countIP = new HashMap<String, Integer>();
        // Keep only the lines that match the search expression, then parse the columns.
        stream.filter(line -> linePattern.matcher(line).find()).forEach(line -> {
            Matcher matcher = columnPattern.matcher(line);
            if (matcher.find()) {
                String ip = matcher.group(1);
                int response = Integer.parseInt(matcher.group(8));
                // Count the HTTP 200 responses for each IP address.
                if (response == 200) {
                    countIP.merge(ip, 1, Integer::sum);
                }
            }
        });
        // Print result
        for (Map.Entry<String, Integer> entry : countIP.entrySet()) {
            System.out.println(entry.getKey() + " " + entry.getValue());
        }
    }
}

In the code above, I stream through the lines and parse them one at a time. Lines that match the search expression (here, a specific IP) are split into columns, and every entry with a response code of 200 is counted against its IP address.
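To experiment with this logic without the `Config` and `logfile` helpers, which are not shown in this post, here is a reduced sketch that runs over an in-memory list of lines. `StatusCounter` and the sample lines are invented; the pattern is the same Common Log Format regex given earlier.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class StatusCounter {

    private static final Pattern COMMON_LOG_FORMAT = Pattern.compile(
            "^(\\S+) (\\S+) (\\S+) \\[([\\w:/]+\\s[+\\-]\\d{4})\\] \"(\\S+) (\\S+)\\s*(\\S+)?\\s*\" (\\d{3}) (\\S+)");

    /** Counts, per client IP, the entries whose status code equals the given value. */
    public static Map<String, Integer> countByIp(List<String> lines, int status) {
        Map<String, Integer> counts = new HashMap<>();
        for (String line : lines) {
            Matcher matcher = COMMON_LOG_FORMAT.matcher(line);
            // Group 1 is the client IP, group 8 the status code.
            if (matcher.find() && Integer.parseInt(matcher.group(8)) == status) {
                counts.merge(matcher.group(1), 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "127.0.0.1 - - [09/Apr/2023:09:01:15 -0700] \"GET / HTTP/1.1\" 200 2326",
                "127.0.0.1 - - [09/Apr/2023:09:01:16 -0700] \"GET /missing HTTP/1.1\" 404 153",
                "10.0.0.5 - - [09/Apr/2023:09:01:17 -0700] \"GET / HTTP/1.1\" 200 2326");
        System.out.println(countByIp(lines, 200));
    }
}
```

Lines that do not match the pattern are silently skipped in this sketch; a real tool would probably want to count or report them.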

Results

The results I get are:

127.0.0.1 464

OK, so it is not very nicely formatted, but it shows that for the IP 127.0.0.1 there are 464 responses with an HTTP status code of 200.

Conclusion

As you can see, parsing a web server log file from Apache or Nginx is not very hard, and the code I have provided does a good job of it. You can add features to it and do a lot more than what I have shown. But this is not the path that I recommend.

There are tools available, either free or, in the case of commercial offerings, at a nominal cost, that let you do a lot more with log analysis and filtering than you can by writing your own code.

Check out the main webpage on all posts and guides related to the Apache web server and NGINX web server.